Does AI actually 'unburden' journalists, like it was supposed to?

New research finds that journalists using AI do not always feel it frees them up for more creative work. But isn't that why we brought in the tech?

As far back as 2019, well before the ChatGPT explosion of 2022, I remember a narrative that artificial intelligence would 'unburden' journalists, leaving them less bogged down by menial tasks and free to do the 'meaningful work'. That was the big selling point.

"It’s going to take the drudge out of the drudge work in many ways. It’s going to free journalists up to have more time to chase stories and create better content," Hugh Westbrook, then senior product owner at Sky News, told me six years ago at a BJTC event.

Westbrook was then talking about groundbreaking technology that would make it easier to perform facial recognition, automate subtitle animations and research large datasets. It would be a massive timesaver and game-changer, he claimed. He was quite possibly right at the time.

But few could have imagined what was around the corner (besides a global pandemic). ChatGPT arrived like a mortar shell and put new technology in the hands of the masses. Suddenly, we could all generate text, images and music in minutes. The quality of the output is limited by your imagination, the precision of your prompts and whether you're on the premium plan.

I have lost count of the number of times the same narrative has been peddled by those talking about their new AI projects in interviews and at journalism events.

At an NCTJ event in December 2023, Reuters' Jane Barrett talked about a 'first pass edit' that could cut down editing time. The Sun's Nadine Foreshaw touched on the time saved by using transcription and translation tech. Manjiri Kulkarni (ex-BBC News Labs) was looking at a jargon-buster solution to save time. Newsquest's Jody Doherty-Cove talked about how he was looking to automate FOI requests through the newly announced AI-assisted roles.

The rationale makes sense. Doherty-Cove would double down on this at our Newsrewired conference in 2024: "We hope this will alleviate the burden on important but mundane parts of the job to give time back to traditional reporters."

What journalist wouldn't want an easier way to file a story in a CMS, fix their typos, put together a quick news story or locate their soundbite?

Or so we thought. There's new data to suggest otherwise.

Research published by the Reuters Institute last month drilled into AI adoption among some 1,000 UK journalists. Half of them now use AI, while a sixth say they have never touched it.

There's a slew of insights on which roles use it the most, what types of tasks they use it for, whether their newsroom builds its own tools, and whether they are afraid of the tech. All fascinating, all valid.

But here is the headline finding from my point of view:

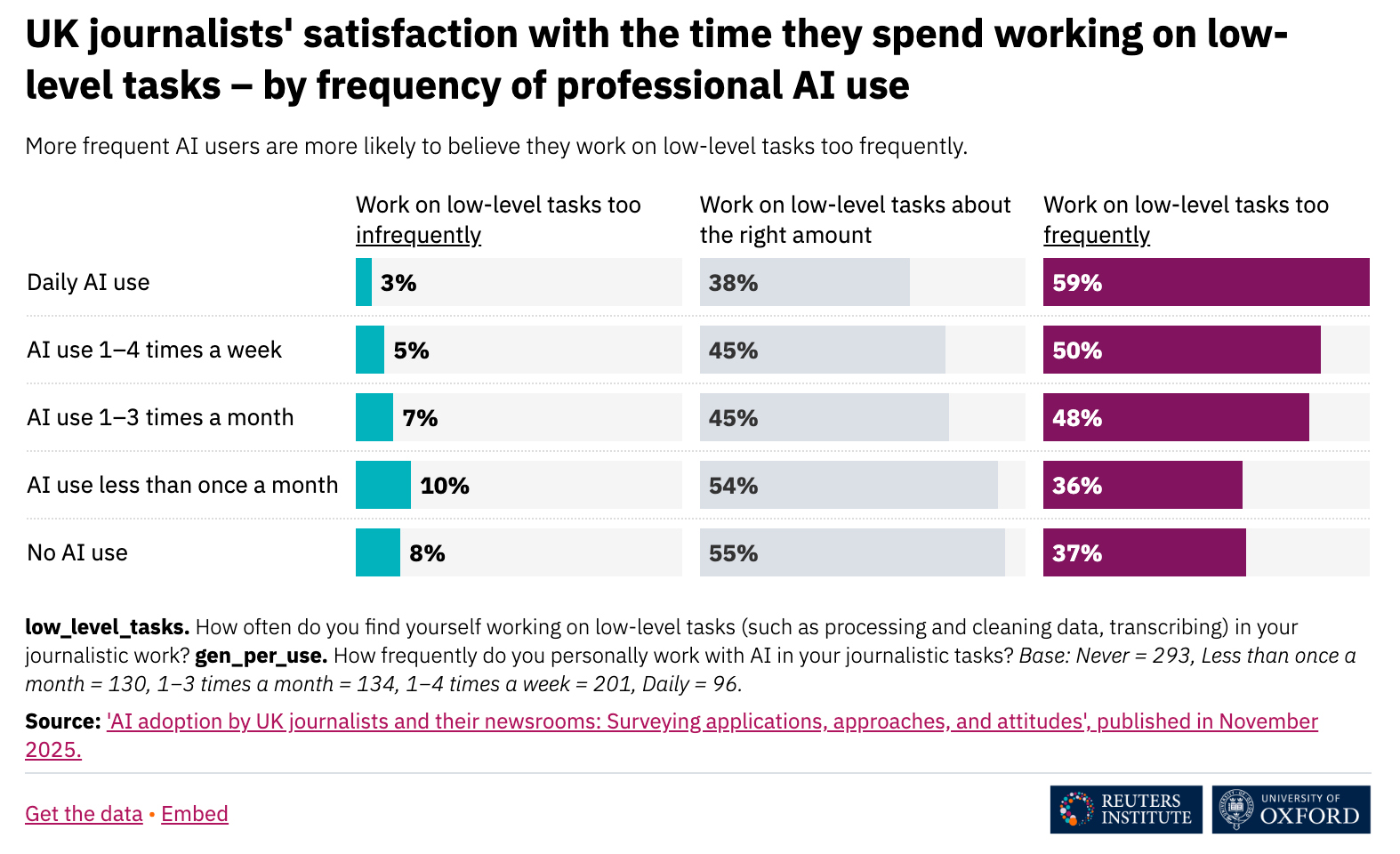

Has AI improved journalists’ job satisfaction? Those who use AI more often are more likely to feel they spend too much time on low-level tasks, and are no more satisfied with the time they get to spend on complex and creative tasks.

Hang on. What? Wasn't this the entire point of bringing AI into newsrooms? To free journalists up to do the more important work?

Lead author Neil Thurman (a senior professor and research fellow with City, University of London and LMU Munich) explained this finding to JUK via email:

"Frequent AI users may feel swamped by low-level work because AI brings its own donkey work (from data cleaning to prompt-writing) or simply because those already buried in drudgery are more likely to turn to AI in hopes of easing the load."

Anyone who has tried to use a genAI tool to draft a news story or news image might understand this. It doesn't always cooperate, it can't always read the documents you upload and, of course, you have to watch it like a hawk in case it jumps to the wrong conclusion.

But there's a deeper point: those doing 'low-level' AI work are more likely to keep using AI.

At Newsquest, Doherty-Cove explained, this is by design. The company's AI-assisted reporters are intentionally siloed roles, trained and onboarded to do all the nitty-gritty AI work. Meanwhile, their colleagues can focus on producing original journalism.

Simply put: AI-assisted reporters don't do what could be considered "complex or creative tasks". For example, they use an in-house AI-powered CMS called Copy Creator to rewrite run-of-the-mill content (press releases, planning applications and community updates) into stories, and then check whether the facts and quotes are accurate.

"The Reuters Institute findings highlight exactly why a thoughtful approach to AI is so important. They suggest that without careful planning, the implementation of AI can introduce new kinds of routine work rather than easing the overall load," Doherty-Cove says in an email.

"At Newsquest, our experience has been different precisely because we have taken a more deliberate approach.

"'Lower-level' tasks are essential to producing the newspapers and day-to-day coverage our readers expect, and those tasks do not disappear. What we have done is introduce AI-assisted roles specifically so that this important work can be done more efficiently, without pulling other reporters away from original journalism."

So AI can save journalists time, if you count the fact that those who don't use the technology no longer have to do the grunt work.

But does AI actually help ordinary journalists working on the big stories? Doherty-Cove says yes, with a caveat.

"AI has the potential to support everyone in the newsroom, not just those working on routine tasks. We have already seen it play a role in high-impact reporting too, for example, by helping us streamline FOI submissions and challenges, which has contributed to front-page stories.

"But that level of benefit only comes when AI is introduced carefully and thoughtfully. With the right approach, it can enhance both the essential day-to-day work and the more creative, original journalism our teams produce."

It reminds me of what AI futurist Andrew Grill said at our recent Newsrewired conference about why AI implementations fail, a problem that is not unique to journalism but runs across many sectors. He said that many businesses are tempted to introduce AI before thinking through the execution, and many then become jaded because the outcome doesn't seem worth the effort:

"I saw this in a law firm that built their own, very expensive internal tool. A partner I spoke to used it and said, 'It just didn't work, it was easier for me to do it by hand, and so I've not gone back to it.'

"You've got fatigue because the tools aren't ready up front, and that's probably why 95 per cent of [AI projects] fail. The five [per cent] that are working, it's because there's support at the top, they've got flexible processes, things that they can change, and they're not afraid of actually rolling it out through the whole organisation."

We want to know what you think about AI in the newsroom. Please respond to our poll or comment on your experiments (or lack thereof) below.