Photography techniques are now so advanced that changing the time of day in a photo can be done at the touch of a button.

But Dan Chung, video technologist and founder of Newsshooter.com, warned that computational photography poses a threat by blurring the lines of reality, speaking at a Mojofest panel yesterday (6 July 2019).

Computational photography works by using computer processes to digitally enhance a shot beyond what the lens and sensor can pick up. That makes it possible to alter the facial features or skin colour of individuals, which, for Chung, is dangerous territory.

"Photography as we know it is not pure. It’s never been pure," he said.

"Things we are so used to are actually abstractions from reality. Computational photography takes that one level further and allows you to do so much more."

He cited Adnan Hajj's digitally enhanced image of the aftermath of an Israeli airstrike in the 2006 Lebanon War as a significant precedent for image doctoring. However, strides have been made since.

"It seems from the outside like photojournalism has been without its doctoring issues, but for a large part we have, as a professional community, figured out ways to look at images, assess images and figure out how real or not they are."

Sophisticated photo-editing software also makes once unimaginable possibilities seem like real threats. But most photo agencies have now taken a tougher stance on airbrushing, excessive lightening or darkening, and image editing generally, according to Chung.

"We need to be looking at verification. This is coming whether we like it or not," he said.

"There’s not much going on in terms of verifying any of this content. There are some obvious methods which can be done, such as cross-referencing, but that doesn’t help you on the finer points."

He called into question how newsrooms have dealt with photo and video content verification so far, as the biggest challenge is yet to come.

"This is coming down the track whether we like it or not. Therefore, how do we deal with it?" Chung asked.

"To be honest, if you look at how we dealt with still images, we didn’t as an industry do a particularly good job. In video, we’ve not done a particularly good job.

"I think we need to get our act together because this is a more complicated order of magnitude."

The solution coming from Rob Layton, smartphone photographer and mobile journalism educator at Bond University in Australia, is to fight fire with fire.

"There are always organic balances and checks. I think once something goes to one extreme, there’s always a pendulum. Anatomy of a Killing is a good example, where those people came together, cross-referenced and proved who those killers were," he explained.

Christopher Cohen, chief technical officer at FiLMiC, added that there are small details which indicate whether an image has been doctored but, predictably, experts are always working to make these harder to pinpoint.

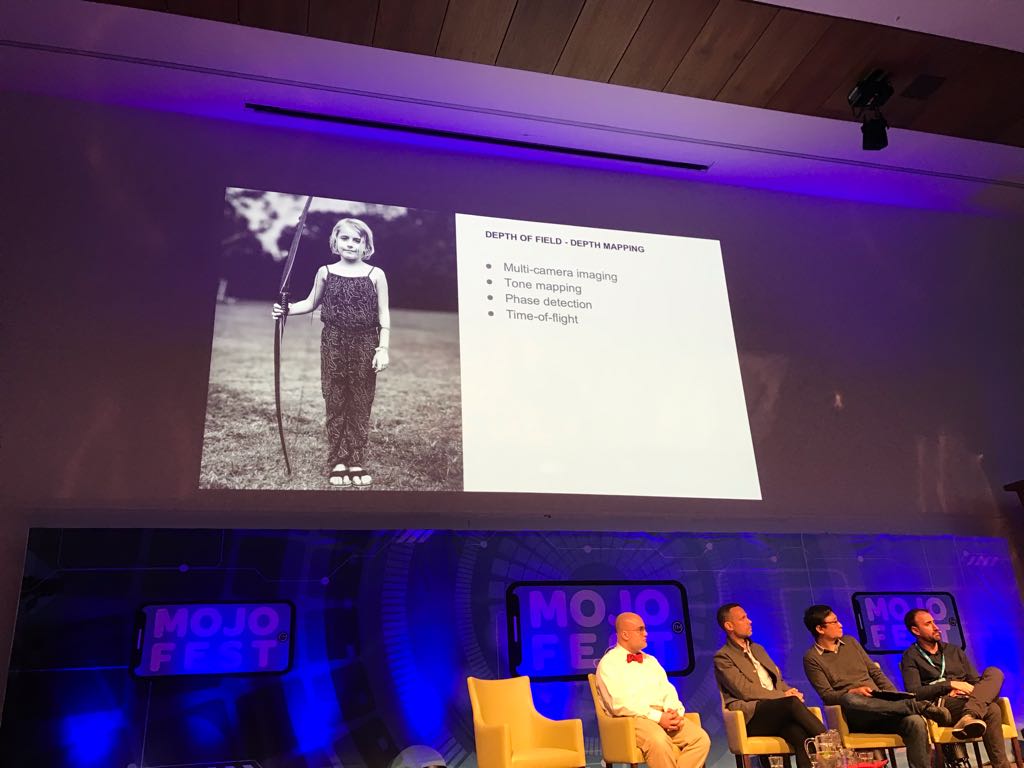

Some giveaways might be in the depth of field, for example, where a picture of a bow and arrow might not have processed the string correctly (see the picture below).

Daniel Green

Other flags to look out for include tone mapping and skewed focus, though these are hard for the untrained eye to notice.

"There are well-known defects with computational imaging," said Cohen.

"Experts consider these engineering challenges to solve and they will solve them."

But where do we draw the line?

Layton said it is useful to look to the past for answers, reasoning that black and white photos were once the norm. When colour was introduced, there was initial scepticism about how true to reality those photos were.

The same logic could be applied to computational photography. Is this simply the next phase in a filtered world? Will it be as normal in the future as colour photography is today?

Dan Rubin, co-founder of The Photographic Journal, argued that lenses themselves have always represented a detachment from reality.

"The lenses from 150 years ago weren’t as good as now, and that is seen as imperfections in rendering.

"Now we don’t even think about the lens being a thing that alters reality. We don’t see in f1.2 and yet we’re really used to seeing motion and stills that are rendered with a massive separation in depth of field."

Filters and lenses still feel a long way from using computational photography to produce doctored images, but the rallying call from this panel was for caution over how these advancements are embraced and monitored.
